Google's DeepMind, the creators of AlphaZero, have written another paper about machine learning applied to chess. But this time it had nothing to do with AlphaZero. Instead, it was about a chess engine achieving grandmaster-level strength without calculating any moves ahead.

I knew that LC0 is very strong on only 1 node (which means that it only evaluates the current position and doesn't look ahead), so I wasn't surprised that it's possible to create an engine without a search that reaches grandmaster strength. However, I was quite surprised by the way that DeepMind did it.

How it Works

Their goal was to show that a complex algorithm, like the evaluations of Stockfish, can be replicated by a neural network.

In order to achieve that level of play, they downloaded 10 million games played on Lichess and extracted every position from them. Then they evaluated every legal move of every position with Stockfish 16 for 50 milliseconds. This resulted in over 15 billion evaluations (which would have taken roughly 8680 days of unparallelized Stockfish evaluation time!). Their model was trained on 530 million pairs of positions with their best move according to Stockfish.
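The evaluation time quoted above is easy to check by hand. The short sketch below redoes the arithmetic, assuming exactly 15 billion evaluations (the real count is somewhat higher) and exactly 50 ms per evaluation on a single thread; the variable names are only for illustration.

```python
# Back-of-the-envelope check of the sequential evaluation time.
EVALUATIONS = 15_000_000_000   # "over 15 billion", rounded down (assumption)
MS_PER_EVALUATION = 50         # Stockfish time limit per evaluated move
SECONDS_PER_DAY = 24 * 60 * 60

total_seconds = EVALUATIONS * MS_PER_EVALUATION / 1000
total_days = total_seconds / SECONDS_PER_DAY
total_years = total_days / 365.25

print(f"{int(total_days)} days, roughly {total_years:.1f} years")
```

So without parallelization, a single Stockfish process would have been busy for more than two decades.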

The model then uses these pairs to learn which move is best in any given position. This is trivial if the position has appeared in the training dataset, but the point is that the model should be able to generalize from the data it was fed and perform well in positions it has never seen before.
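To make the idea of "pairs of positions with their best move" more concrete, here is a toy sketch. It is not DeepMind's pipeline: the positions are plain FEN strings, the scores are made-up numbers standing in for Stockfish evaluations, and names such as `best_move` and `memorized_policy` are hypothetical. It turns per-move evaluations into training pairs and shows why pure memorization isn't enough.

```python
from typing import Dict, List, Optional, Tuple

# Made-up evaluations: for each position (FEN), a score for every legal move
# from the point of view of the side to move. Only a few moves are listed.
evaluations: Dict[str, Dict[str, float]] = {
    "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1": {
        "e2e4": 0.55, "d2d4": 0.54, "g1f3": 0.53, "a2a3": 0.49,
    },
    "rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq e3 0 1": {
        "e7e5": 0.47, "c7c5": 0.46, "a7a6": 0.43,
    },
}

def best_move(scores: Dict[str, float]) -> str:
    """Return the move with the highest score."""
    return max(scores, key=scores.get)

# One (position, best move) pair per position: the supervised training targets.
training_pairs: List[Tuple[str, str]] = [
    (fen, best_move(scores)) for fen, scores in evaluations.items()
]

# A lookup table "solves" every position it has seen...
lookup = dict(training_pairs)

def memorized_policy(fen: str) -> Optional[str]:
    """Return the stored best move, or None for an unseen position."""
    return lookup.get(fen)

# ...but has no answer for a position outside the dataset (here: after 1. d4).
unseen = "rnbqkbnr/pppppppp/8/8/3P4/8/PPP1PPPP/RNBQKBNR b KQkq d3 0 1"
print(memorized_policy(training_pairs[0][0]))
print(memorized_policy(unseen))
```

A neural network trained on such pairs has to produce a move for the unseen position as well, which is exactly the generalization the paper is testing.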

DeepMind achieved that and the model reached about grandmaster level in blitz games.

Results

Games

The engine was tested by playing games on Lichess against other bots and human players. Against bots, their search-less engine reached a rating of 2299 and against humans a whopping 2895!

They explain the discrepancy by the fact that the two rating pools are probably quite different and that other bots are far more punishing when it comes to tactical oversights, which can easily happen with an engine that isn't calculating. The engine also has some trouble converting completely winning positions, so bots might save a draw from a hopeless position where human players would have resigned much earlier.

Puzzles

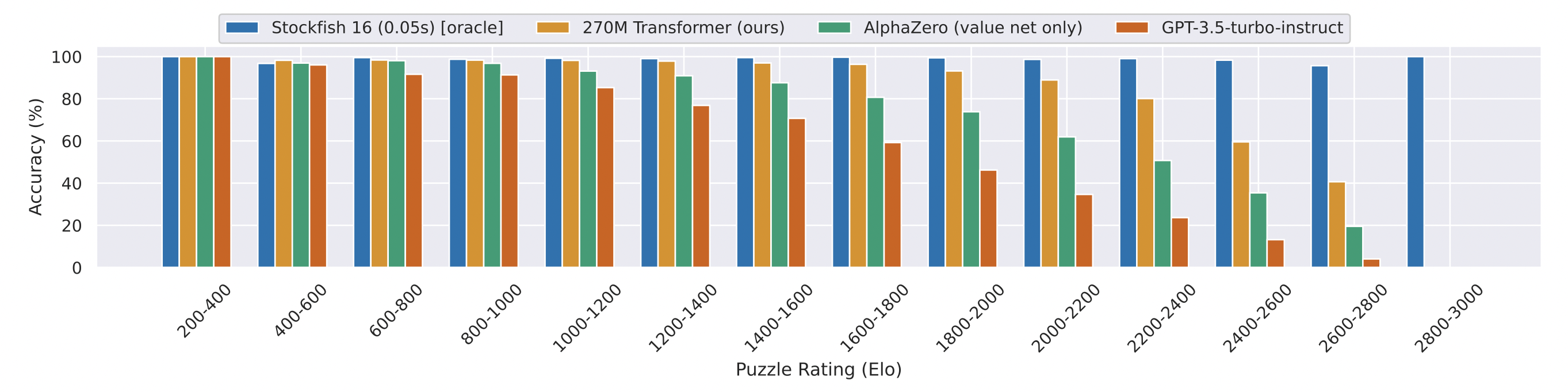

They also tested the performance of their engine on Lichess puzzles of different ratings. They compared their model to Stockfish 16 with a time limit of 50 ms (the same setting used to generate the training data), the value network of AlphaZero trained on 44 million games, and GPT-3.5-turbo-instruct. The graph above shows how these programs performed on 10,000 Lichess puzzles.

Games by the Engine

At the end of the paper, the team has added 7 games played by the engine, which you can find in this Lichess study.

You can also check out GM Matthew Sadler's video on YouTube if you want his thoughts on some of the games and the engine itself.

According to the paper, the engine plays aggressively and likes to sacrifice material for long-term compensation. It also disagrees sometimes with the evaluations from Stockfish and makes some tactical mistakes.

Finally, note that the goal of the paper wasn't to create the strongest chess engine possible. Instead, it was to show that a complex algorithm, like the evaluation of Stockfish, can be replicated by a feed-forward neural network.